I’m uncontainably excited to report that the ggplot2 extension package ggalt is now on CRAN.

The absolute best part of this package is the R community members who contributed suggestions and new geoms, stats, annotations and integration features. This release would not be possible without the PRs from:

- Ben Bolker

- Ben Marwick

- Jan Schulz

- Carson Sievert

and a host of folks who have made suggestions and have put up with broken GitHub builds along the way. Y’all are awesome.

Please see the vignette and graphics-annotated help pages for info on everything that’s available. Some highlights include:

- multiple ways to render splines (so you can make those cool, smoothed D3-esque line charts :-)

- geom_cartogram() which replicates the old functionality of geom_map() so your old mapping code doesn’t break anymore

- a re-re-mended coord_proj() (but, read on about why you should be re-thinking of how you do maps in ggplot2)

- lollipop charts (geom_lollipop())

- dumbbell charts (geom_dumbbell())

- step-ribbon charts

- the ability to easily encircle points (beyond those boring ellipses)

- byte formatters (i.e. turn 1024 to 1 Kb, etc)

- better integration with plotly
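To give a feel for a few of the highlights above, here is a minimal sketch of a lollipop chart, a dumbbell chart and a byte formatter. The scores data frame, its column names and the colours are made up purely for illustration; the vignette and help pages remain the place to go for the full set of parameters.

```r
library(ggplot2)
library(ggalt)

# Hypothetical data, invented only for this illustration
scores <- data.frame(
  team   = c("A", "B", "C", "D"),
  before = c(12, 30, 22, 8),
  after  = c(20, 35, 18, 15)
)

# Lollipop chart: a stem running from the axis to one point per team
p_lollipop <- ggplot(scores, aes(x = team, y = after)) +
  geom_lollipop(point.colour = "steelblue", point.size = 3)

# Dumbbell chart: one point at x, one at xend, joined by a segment
p_dumbbell <- ggplot(scores, aes(x = before, xend = after, y = team)) +
  geom_dumbbell(colour_x = "grey50", colour_xend = "steelblue")

# Byte formatter: turn a raw byte count into a Kb label
kb_label <- Kb(1024)
```

Printing p_lollipop or p_dumbbell at the console draws the chart, and kb_label holds the formatted label.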

If you do any mapping in ggplot2, please follow the machinations of geom_sf() and the sf package. Ed and the rest of the R spatial community have done 100% outstanding work here and it is going to change how you think and work spatially in R forever (in an awesome way). I hope to retire coord_proj() and geom_cartogram() some day soon thanks to all their hard work.
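For anyone who has not tried it yet, a rough sketch of the geom_sf() approach looks like the following. It assumes a ggplot2 version that ships geom_sf() and coord_sf(), and it uses the North Carolina shapefile bundled with the sf package; the fill column and the EPSG code are only illustrative choices.

```r
library(ggplot2)
library(sf)

# Read the sample North Carolina counties shapefile that ships with sf
nc <- st_read(system.file("shape/nc.shp", package = "sf"), quiet = TRUE)

# geom_sf() draws the geometry column directly; coord_sf() takes care of
# the projection (EPSG:32119 is a North Carolina state plane CRS)
p_nc <- ggplot(nc) +
  geom_sf(aes(fill = AREA)) +
  coord_sf(crs = st_crs(32119))
```

The projection travels with the data and the coordinate system here, which is the job coord_proj() was doing by hand.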

Your contributions, feedback and suggestions are welcome and encouraged. The next steps for me w/r/t ggalt are ensuring 100% plotly coverage since it’s the best way to make your ggplot2 plots interactive. There are a few more additions that didn’t make it into this release that I’ll also be integrating.

Please make sure to say “thank you” to the above contributors if you see them in person or on the internets. They’ve done great work and are prime examples of how awesome and talented the R community is.

The ? Resistance

I need to be up-front about something: I’m partially at fault for ? being elected. While I did not vote for him, I could not in good conscience vote for his Democratic rival. I wrote in a ticket that had one Democrat and one Republican on it. The “who” doesn’t matter, and my district in Maine went heavily for ?’s opponent, so my direct choice had no real impact, but I did actively point out the massive flaws in his opponent. Said flaws were many, and I believe we’d be in a different bad place with her, though not as bad a place as the one we’re in now. But that’s in the past, and we’ve got a new reality to deal with now.

This is a (hopefully) brief post about finding a way out of this mess we’re in. It’s far from comprehensive, but there’s honest-to-goodness evil afoot that needs to be met head on.

Brand Damage

You’ll note I’m not using either of their names. Branding is extremely important to both of them, but is the almost singular focus of ?. His name is his hotel brand, company brand and global identifier. Using it continues to add it to the history books and can only help inflate the power of that brand. First and foremost, do not use his name in public posts, articles, papers, etc. “POTUS”, “The President”, “The Commander in Chief”, “?” (chosen to match his skin/hair color, complexion and that comb-over tuft) are all sufficient references since there is date-context with virtually anything we post these days. Don’t help build up his brand. Don’t populate historical repositories with his name. Don’t give him what he wants most of all: attention.

Document and Defend with Data

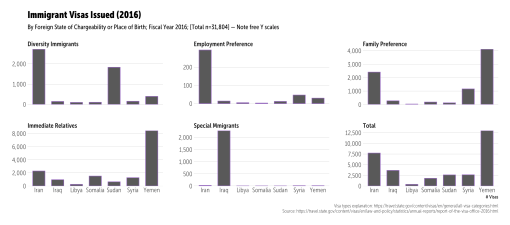

Speaking of the historical record, we need to be regularly blogging and publishing the actual facts, based on data. We also need to save data, as there are signs of a deliberate government purge going on. I’m not sure how successful said purge will be in the long run, and I suspect that the data purging and corruption by this administration will have lasting unintended consequences.

Join/support @datarefuge to save data & preserve the historical record.

Install the Wayback Machine plugin and take the 2 seconds per site you visit to click it.

Create blog posts, tweets, news articles and papers that counter bad facts with good/accurate/honest ones. Don’t make stuff up (even a little). Validate your posits before publishing. Write said posts in a respectful tone.

Support the Media

When the POTUS’ Chief Strategist says things like “The media should be embarrassed and humiliated and keep its mouth shut and just listen for a while”, it’s a deliberate attempt to curtail the Press, and eventually there will be more actions to actually suppress Press freedom.

I’m not a liberal (I probably have no convenient definition) and I think the Press gave Obama a free ride during his eight-year rule. They are definitely making up for that now, mostly because their very livelihoods are at stake.

The problem with them is that they are continuing to let themselves be manipulated by ?. He’s a master at this manipulation. Creating a story about the size of his hands in a picture delegitimizes you as a purveyor of news, especially when — as you’re watching his hands — he’s separating families, normalizing bigotry and undermining the Constitution. Forget about the hands and even forget about the hotels (for now). There was even a recent story trying to compare email servers (the comparison is very flawed). Stop it.

Encourage reporters to focus on things that actually matter and provide pointers to verifiable data they can use to call out the lack of veracity in ?’s policies. Personal blog posts are fleeting things but an NYT, WSJ (etc) story will live on.

Be Kind

I’ve heard and read some terrible language about rural America from what I can only classify as “liberals” in the week this post was written. Intellectual hubris and actual, visceral disdain for those who don’t think a certain way were two major reasons why ? got elected. The actual reasons he got elected are diverse and very nuanced.

Regardless of political leaning, pick your head up from your glowing rectangles and go out of your way to regularly talk to someone who doesn’t look, dress, think, eat, etc. like you. Engage everyone with compassion. Regularly challenge your own beliefs.

There is a wedge that I estimate is about 1/8th of the way into the core of America now. Perpetuating this ideological “us vs them” mindset is only going to fuel the fires that created the conditions we’re in now and drive the wedge in further. The only way out is through compassion.

Remember: all life matters. Your degree, profession, bank balance or faith alignment doesn’t give you the right to believe you are better than anyone else.

FIN (for now)

I’ll probably move most of my future opines to a new medium (not uppercase Medium) as you may be getting this drivel when you want recipes or R code (even though there are separate feeds for them).